How Smart IoT Nodes at the Extreme Edge are Bringing Ultra-low Power Options to Embedded Vision Systems

A new way of developing SoCs for smart IoT nodes could offer a more flexible way of harnessing neural networks for ultra-low-power embedded vision.

Introduction

Historically, OEMs offering embedded vision have been obligated to rely mainly on cloud-based AI. Increasingly though, the cost-power-performance mix for these applications can be balanced more favorably by using powerful application processors at the IoT endpoints.

Even better, solutions are now becoming available that can bring embedded vision to smarter IoT nodes that integrate microcontrollers (MCUs), enabling low-power vision applications like person detection, wake-on-approach, driver awareness and robotics.

This article will explain how it’s now possible to perform machine vision in IoT endpoints in the milliwatt range as a result of an approach to System on Chip (SoC) design that moves away from conventional MCU architecture while retaining the cost and flexibility benefits of MCUs.

Why Do We Need Smart IoT Nodes?

Let’s begin by considering a smart IoT node with a role in, for example, a person detection solution, where it would acquire data from a camera sensor, perform signal processing and feature extraction, and run a machine learning algorithm.

A factor broadening the market for person detection has been the General Data Protection Regulation, or GDPR, with its prohibition on capturing images of people without permission. For example, an image of goods on a shelf, needed for inventory management, cannot be part of inferencing if that image includes humans.

To compete successfully, OEMs offering person detection and other machine learning solutions that use inferencing must meet customer demands to tamp down data storage and communication costs while assuring security and privacy.

Inferencing at the smart IoT node is advantageous for a number of reasons. Storage costs shrink because only actionable data is sent to the cloud. The price paid to the network operator also decreases, because data stays at the intelligent endpoint for inferencing rather than traveling to and from the cloud. In addition, data that need not travel to the cloud for inferencing is not exposed to security and privacy breaches during transfer. Latency is avoided as well, as is the negative impact on real-time capability caused by sending data to the cloud unnecessarily.
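The storage and network savings can be illustrated with back-of-the-envelope arithmetic. The sketch below compares the daily data volume of streaming uncompressed frames to the cloud against sending only detection events. Every number in it (a 96x96 grayscale frame, one frame per second, 500 events per day, 32 bytes per event) is a hypothetical value chosen for illustration and does not come from a measured system.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def daily_bytes_streaming(width, height, bytes_per_pixel, frames_per_second):
    """Bytes per day if every uncompressed frame is sent to the cloud."""
    return width * height * bytes_per_pixel * frames_per_second * SECONDS_PER_DAY


def daily_bytes_events(events_per_day, bytes_per_event):
    """Bytes per day if only inference results (events) leave the node."""
    return events_per_day * bytes_per_event


if __name__ == "__main__":
    # Hypothetical camera and event parameters (assumptions, not measurements).
    streamed = daily_bytes_streaming(96, 96, 1, 1)
    events = daily_bytes_events(500, 32)
    print(f"streaming raw frames: {streamed / 1e6:.1f} MB/day")
    print(f"events only:          {events / 1e3:.1f} kB/day")
    print(f"reduction factor:     {streamed / events:.0f}x")
```

A real deployment would compress frames before sending them, so the gap would be smaller than this uncompressed comparison suggests, but the direction of the saving is the same.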

A Power vs Performance Conundrum

However, these boons to cost reduction, security, and privacy have to this point not been as available as they could be. Smart IoT nodes are typically battery powered or rely on a limited power source, sometimes using energy harvesting. If the person detection system in our example tried to rely on traditional MCUs alone, power consumption would rise beyond the power capacity of the node as performance demands rose. This would not be a suitable solution.

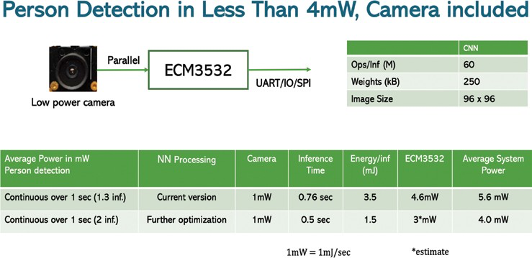

For example, consider the Convolutional Neural Network (CNN) in Figure 1. Widely used in machine vision for object classification, CNNs comprise several layers. The convolutional and fully connected layers are the most cycle-intensive, with heavy matrix multiply-accumulate (MMAC) use that MCUs are ill equipped to perform.
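To see why these layers dominate the cycle count, it helps to count multiply-accumulate operations directly. The sketch below uses the standard formulas for a 2-D convolution and a fully connected layer. The layer shapes are hypothetical and are not taken from the network in Figure 1.

```python
def conv2d_macs(out_h, out_w, out_channels, kernel_h, kernel_w, in_channels):
    """MACs for one standard 2-D convolution layer.

    Each output value needs kernel_h * kernel_w * in_channels multiply-accumulates,
    and there are out_h * out_w * out_channels output values.
    """
    return out_h * out_w * out_channels * kernel_h * kernel_w * in_channels


def dense_macs(in_features, out_features):
    """MACs for one fully connected layer (one per weight)."""
    return in_features * out_features


if __name__ == "__main__":
    # Hypothetical shapes, chosen only to show the order of magnitude.
    conv = conv2d_macs(out_h=48, out_w=48, out_channels=8,
                       kernel_h=3, kernel_w=3, in_channels=1)
    fc = dense_macs(in_features=256, out_features=2)
    print(f"convolution layer:     {conv} MACs")
    print(f"fully connected layer: {fc} MACs")
```

Even this small single convolution layer needs more than a hundred thousand MACs per frame, which is why hardware that retires more than one MAC per cycle matters at the node.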

Attempts to get around the power-rise-with-higher-performance dilemma while clinging to the traditional microcontroller idea have led to no shortage of neural networks for microcontrollers. But until now, bringing out production-grade solutions that overcome performance and power constraints to create a smarter IoT node has proven elusive.

The Steps to Machine Vision in the 1mW Range

Taking on Workloads in Any Combination

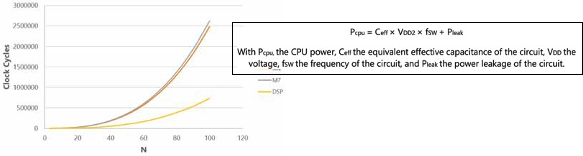

So, how to significantly improve upon the efficiency possible using direct implementation of neural networks on a standard microcontroller? One step is recognizing that smart IoT nodes face three workloads: a procedural one, another for digital signal processing, and a third for machine learning, making heavy use of MMAC operations.

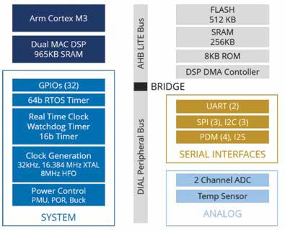

To target each workload’s unique demands, in the solution described here an Arm Cortex-M CPU handles the procedural load, while a dual MAC 16-bit DSP serves signal processing and machine learning needs. With this approach, which takes full advantage of the DSP’s benefits, neural network calculation performance can double or even triple.
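The arithmetic that a dual MAC 16-bit DSP speeds up can be emulated in a few lines. The sketch below is an illustration only: it assumes the common Q15 fixed-point format (signed 16-bit values representing the range from -1 up to just under 1) and consumes two element pairs per loop step to mimic two MAC units working in parallel. It does not model the actual DSP instruction set, and the function names are hypothetical.

```python
def to_q15(x):
    """Quantize a float in [-1, 1) to a signed 16-bit Q15 integer, saturating."""
    return max(-32768, min(32767, int(round(x * 32768))))


def dual_mac_dot(a, b):
    """Dot product of two Q15 vectors, two products per step.

    Returns (accumulator, steps). The accumulator is in Q30 format because
    multiplying two Q15 values yields a Q30 product.
    """
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    acc = 0
    steps = 0
    # Main loop: each step stands in for one dual-MAC cycle.
    for i in range(0, len(a) - 1, 2):
        acc += a[i] * b[i] + a[i + 1] * b[i + 1]
        steps += 1
    # An odd-length vector leaves one element for a final single MAC.
    if len(a) % 2:
        acc += a[-1] * b[-1]
        steps += 1
    return acc, steps


if __name__ == "__main__":
    weights = [to_q15(v) for v in (0.5, -0.25, 0.125, 0.75)]
    inputs = [to_q15(v) for v in (0.5, 0.5, 0.5, 0.5)]
    acc, steps = dual_mac_dot(weights, inputs)
    print(f"result = {acc / 2**30}, steps = {steps} for {len(weights)} elements")
```

Halving the number of loop steps for the same dot product is the simplest view of where the gain over a single-MAC core comes from. Real speedups also depend on memory bandwidth and how the data is laid out.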

The Hybrid Multicore architecture used with this approach can tackle workloads in any combination, including network stacks, RTOS, digital filters, time-frequency conversions, RNN, CNN, and traditional artificial intelligence like searches, decision trees, and linear regression.

Lowering Power Consumption to the mW Range

Developing a hybrid multicore architecture that enables neural networks to run more efficiently by accounting for the differences in various workloads is one component needed for more power-efficient and higher performing embedded vision systems. Another is applying a new patented design technology to decrease power.

Customizing for the Extreme Edge

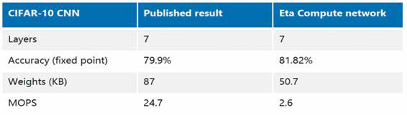

Design techniques and optimization efforts that are deliberately tailored for the unique needs of the extreme edge are yielding results. For example, Eta Compute now offers a production-grade neural sensor processor, the ECM3532 (Figure 3).

When the ECM3532 neural sensor processor ran a person detection model in a recent test (Figure 4), the SoC ran the algorithm at an average power of 4.6mW, and the average system power, including the camera, was 5.6mW, with an inference time of 0.7s (1.3 inferences per second). We estimate that with further optimization, an average system power of 4mW can be reached at 2 inferences per second.
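These figures can be turned into energy per inference and a rough battery life. The sketch below assumes the node runs inferences continuously at the stated rate, so that energy per inference is simply average power divided by inference rate. The 1000mWh battery capacity is a hypothetical value used only to show the calculation, and the estimate ignores self-discharge and conversion losses.

```python
def energy_per_inference_mj(avg_power_mw, inferences_per_second):
    """Energy per inference in millijoules (mW divided by 1/s gives mJ)."""
    return avg_power_mw / inferences_per_second


def battery_life_hours(capacity_mwh, avg_power_mw):
    """Idealized runtime in hours for a battery of the given capacity."""
    return capacity_mwh / avg_power_mw


if __name__ == "__main__":
    HYPOTHETICAL_BATTERY_MWH = 1000.0  # assumption, not from the test

    cases = {
        "measured system (5.6mW, 1.3 inf/s)": (5.6, 1.3),
        "estimated system (4mW, 2 inf/s)": (4.0, 2.0),
    }
    for label, (power_mw, rate) in cases.items():
        energy = energy_per_inference_mj(power_mw, rate)
        hours = battery_life_hours(HYPOTHETICAL_BATTERY_MWH, power_mw)
        print(f"{label}: {energy:.2f} mJ per inference, "
              f"{hours / 24:.1f} days on the assumed battery")
```

Under these assumptions, the estimated optimization roughly halves the energy spent on each inference while also lowering average system power.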

Just the Beginning

The capabilities that accrue from ultra-low power operation at the extreme edge can benefit applications that include people detection, as we have seen above, but also gaze detection for safer semi-autonomous driving, agricultural applications requiring accurate animal detection, people counting, and the list goes on.

Free from the inefficiencies and latency associated with solutions where the heavy lifting must happen primarily in the cloud, OEMs will be able to offer customers in industrial, automotive, and consumer markets the leaps forward AI promises.